Links

We live in interesting times.

3 stars

The Short Case for Nvidia Stock | YouTube Transcript Optimizer Blog

You’ve likely heard about the NVIDIA crash on Monday; some people speculate that this blog post was a major contributor, and the analysis is thorough and impressive. (And possibly also wrong — see Dario Amodei’s post later in this e-mail.) Still, you’re likely to learn important things by reading this:

Perhaps most devastating is DeepSeek's recent efficiency breakthrough, achieving comparable model performance at approximately 1/45th the compute cost. This suggests the entire industry has been massively over-provisioning compute resources. Combined with the emergence of more efficient inference architectures through chain-of-thought models, the aggregate demand for compute could be significantly lower than current projections assume. The economics here are compelling: when DeepSeek can match GPT-4 level performance while charging 95% less for API calls, it suggests either NVIDIA's customers are burning cash unnecessarily or margins must come down dramatically.

The fact that TSMC will manufacture competitive chips for any well-funded customer puts a natural ceiling on NVIDIA's architectural advantages. But more fundamentally, history shows that markets eventually find a way around artificial bottlenecks that generate super-normal profits. When layered together, these threats suggest NVIDIA faces a much rockier path to maintaining its current growth trajectory and margins than its valuation implies. With five distinct vectors of attack— architectural innovation, customer vertical integration, software abstraction, efficiency breakthroughs, and manufacturing democratization— the probability that at least one succeeds in meaningfully impacting NVIDIA's margins or growth rate seems high. At current valuations, the market isn't pricing in any of these risks.
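The "at least one succeeds" claim in the excerpt above is simple arithmetic, so here is a minimal sketch of it. The excerpt gives no probabilities and does not say the five threats are independent; both the independence assumption and the 20% figure below are mine, chosen purely for illustration, and `prob_at_least_one` is a hypothetical helper name.

```python
def prob_at_least_one(probs):
    """Chance that at least one event happens, ASSUMING the events are independent."""
    none_happen = 1.0
    for p in probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must lie between 0 and 1")
        # Multiply in the chance that this particular event does NOT happen.
        none_happen *= 1.0 - p
    return 1.0 - none_happen


# Illustrative assumption only: each of the five threats has a 20% chance of landing.
five_threats = [0.2] * 5
print(round(prob_at_least_one(five_threats), 2))  # about 0.67
```

Under those made-up numbers, five individually unlikely threats add up to roughly a two-in-three chance that at least one lands. If the threats are correlated, which seems plausible, the combined figure would differ.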

2 stars

DeepSeek FAQ | Stratechery

So V3 is a leading edge model?

It’s definitely competitive with OpenAI’s 4o and Anthropic’s Sonnet-3.5, and appears to be better than Llama’s biggest model. What does seem likely is that DeepSeek was able to distill those models to give V3 high quality tokens to train on.

What is distillation?

Distillation is a means of extracting understanding from another model; you can send inputs to the teacher model and record the outputs, and use that to train the student model. This is how you get models like GPT-4 Turbo from GPT-4. Distillation is easier for a company to do on its own models, because they have full access, but you can still do distillation in a somewhat more unwieldy way via API, or even, if you get creative, via chat clients.

Distillation obviously violates the terms of service of various models, but the only way to stop it is to actually cut off access, via IP banning, rate limiting, etc. It’s assumed to be widespread in terms of model training, and is why there are an ever-increasing number of models converging on GPT-4o quality. This doesn’t mean that we know for a fact that DeepSeek distilled 4o or Claude, but frankly, it would be odd if they didn’t.

Distillation seems terrible for leading edge models.

It is! On the positive side, OpenAI and Anthropic and Google are almost certainly using distillation to optimize the models they use for inference for their consumer-facing apps; on the negative side, they are effectively bearing the entire cost of training the leading edge, while everyone else is free-riding on their investment.

Indeed, this is probably the core economic factor undergirding the slow divorce of Microsoft and OpenAI. Microsoft is interested in providing inference to its customers, but much less enthused about funding $100 billion data centers to train leading edge models that are likely to be commoditized long before that $100 billion is depreciated.

Is this why all of the Big Tech stock prices are down?

In the long run, model commoditization and cheaper inference — which DeepSeek has also demonstrated — is great for Big Tech. A world where Microsoft gets to provide inference to its customers for a fraction of the cost means that Microsoft has to spend less on data centers and GPUs, or, just as likely, sees dramatically higher usage given that inference is so much cheaper. Another big winner is Amazon: AWS has by-and-large failed to make their own quality model, but that doesn’t matter if there are very high quality open source models that they can serve at far lower costs than expected.
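The distillation loop described in the Stratechery excerpt (send inputs to the teacher, record the outputs, train the student on them) can be sketched in a few lines. This is a toy under stated assumptions, not how any lab actually does it: the "teacher" is a hypothetical black-box function standing in for a large model behind an API, and the "student" is a two-parameter linear model fitted by least squares. Real distillation trains a neural network on recorded text or token probabilities. All function names here are invented for illustration.

```python
import random


def teacher(x: float) -> float:
    """Hypothetical stand-in for a large model we can only query, not inspect."""
    return 3.0 * x + 2.0


def collect_pairs(n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Send n inputs to the teacher and record (input, output) pairs."""
    rng = random.Random(seed)
    inputs = [rng.uniform(-10.0, 10.0) for _ in range(n)]
    return [(x, teacher(x)) for x in inputs]


def fit_student(pairs: list[tuple[float, float]]) -> tuple[float, float]:
    """Train the student: least-squares fit of y = slope * x + intercept."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def student(x: float, params: tuple[float, float]) -> float:
    """The distilled model: cheap to run, never saw the teacher's internals."""
    slope, intercept = params
    return slope * x + intercept


params = fit_student(collect_pairs(100))
print(abs(student(4.0, params) - teacher(4.0)) < 1e-6)  # True
```

The student only ever sees the teacher's answers, which is why the excerpt says the only real defence is cutting off access.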

On DeepSeek and Export Controls | Dario Amodei

From Anthropic’s CEO:

A few weeks ago I made the case for stronger US export controls on chips to China. Since then DeepSeek, a Chinese AI company, has managed to — at least in some respects — come close to the performance of US frontier AI models at lower cost.

Here, I won't focus on whether DeepSeek is or isn't a threat to US AI companies like Anthropic (although I do believe many of the claims about their threat to US AI leadership are greatly overstated). Instead, I'll focus on whether DeepSeek's releases undermine the case for those export control policies on chips. I don't think they do. In fact, I think they make export control policies even more existentially important than they were a week ago. […]

DeepSeek does not "do for $6M what cost US AI companies billions". I can only speak for Anthropic, but Claude 3.5 Sonnet is a mid-sized model that cost a few $10M's to train (I won't give an exact number). Also, 3.5 Sonnet was not trained in any way that involved a larger or more expensive model (contrary to some rumors). Sonnet's training was conducted 9-12 months ago, and DeepSeek's model was trained in November/December, while Sonnet remains notably ahead in many internal and external evals. Thus, I think a fair statement is "DeepSeek produced a model close to the performance of US models 7-10 months older, for a good deal less cost (but not anywhere near the ratios people have suggested)".

How My Trip to Quit Sugar Became a Journey Into Hell | New York Times

I love two things in this world: sugar and myself. One result of my nonstop efforts to delight myself is that I end up consuming, every day, vast quantities of sugar. Oh, my God, I forgot my husband. Sorry, I love three things in this world: sugar and myself and my husband. Now that you bring him up, my husband, who is very dear to me, is worth mentioning. He does not love sugar.

What my husband enjoys is scaling new peaks of health. My interminable quest to attain additional sugar is an inexhaustible source of stress for him. Here is a scene, variations of which play out with impressive regularity in our house: My husband sticks his head around a doorway and says something like: “There are 30 empty packets of Gushers in the trash can. Do you know anything about that?” I (completely horizontal on the couch) lock eyes with him — a capo squaring off against Quantico’s newest class clown. “I never heard anything about that in my life,” I say. I keep staring until he walks away.

The savvy reader may suspect that I exaggerate for comedic effect. Am I, a (just barely) 35-year-old woman, truly habitually eating many, many pouches of Strawberry Splash Gushers and trying to hide all evidence of this from my husband?

The Most Impressive Prediction of All Time | YouTube Transcript Optimizer Blog

Turns out the founder of YouTube Transcript Optimizer writes interesting things; who knew.

I've always been impressed when people can truly predict the future. Perhaps it's from my years working as a professional investor at hedge funds, but when someone makes a concrete, explicit prediction— particularly one that is highly contrarian and non-consensus at the time (which is the only way to make truly outsized returns in the market)— I sit up and take notice. The world is filled with people who try to indicate after the fact that they, of course, knew and predicted some outcome would happen, and that it was even obvious at the time.

But usually you will find if you do more digging that, for every such claim, there are often 3+ more predictions they made that turned out to be totally false. Or, they predicted something which, at the time they actually took an explicit stand that could be objectively judged later on, their prediction was already obvious to most people and "priced in" to the market. Or, that they made an impossibly vague and imprecise prediction, with lots of hedging and qualifications, to cover a much larger range of possible outcomes (making the predictions much less actionable).

Of course, it also isn't so impressive if the thing you are predicting is not of any practical importance in the world, such as predicting ahead of time that a particular indie musician will become a lot more popular in future years. […]

My candidate for the most impressive prediction of all time came from a person who is practically unknown in the West except for a relatively small group of historians and people interested in niche subjects. The person I'm thinking of is named Pyotr Durnovo, and he was an Imperial Russian government official who lived from 1842 to 1915.

Trump starts to break things | Noahpinion

I still have not come across even a barely-intelligent defence of these tariffs; let me know if you read one.

Before the election, I wrote a whole bunch of posts about why it was a bad idea to elect Donald Trump. But sadly, America elected him anyway. After he won, I wrote a post outlining a best-case scenario for Trump’s second term. The optimistic scenario was that Trump’s threats to enact harmful policies and cause chaos were mostly bluster, and that in the end he’d end up just waging a bunch of culture wars instead of wrecking the economy and gutting U.S. institutions.

We’re only two weeks into Trump’s presidency, and that optimistic scenario is looking more and more remote by the day. Trump is creating a lot of institutional chaos, and I’ll talk about that in a bit. Today I’m going to talk about Trump’s main economic policy: tariffs.

Loskop to Swakop: Four Days in the Namib Desert | The Radavist

Having fallen in love with cycling as a teenager while living in Namibia for a stint in the 90s, Capetonian Stan Engelbrecht has been dreaming about spending more and more time riding through the desolate desert terrain and finding creative ways to make it happen. Join Engelbrecht and a cast of characters for a bike overlanding trip through the austere Namibian landscape…

Concept Cells Help Your Brain Abstract Information and Build Memories | Quanta Magazine

Individual cells in the brain light up for specific ideas. These concept neurons, once known as “Jennifer Aniston cells,” help us think, imagine and remember episodes from our lives.

Where Do Official Medical Guidelines Come From? | ParentData

At their simplest, medical guidelines are published recommendations regarding the management of a specific health-related topic from a group of experts on that topic. There are guidelines issued on everything from whether infants should use walkers, to which antibiotics to prescribe for a urinary tract infection, to when to get screened for various types of cancers, to goals for blood pressure readings in different types of patients on blood pressure medications.

Guidelines are not written with the intention that they are interpreted and read by the general public. The intended audience for published guidelines are physicians and public health professionals who then apply the guidelines to individual people or populations. This is not always how it works. The media often cover the publication of new guidelines, and when those are controversial (for example, the 2023 guidelines for managing childhood obesity), that can generate confusion.

Why Biden failed | Silver Bulletin

He made a triple devil’s bargain: with the pandemic, his age, and a Democratic Party that can’t get its priorities straight.

It's Time To Stop Blaming Misinformation for Cynicism and Mistrust | Rob Henderson’s Newsletter

To people who are concerned that democratic societies are plagued by misinformation, this is deeply alarming. Meta, those people argue, is yielding to forces that reduce institutional trust and drive the public to accept falsehoods and conspiracy theories about climate change, vaccines, election fraud, and more. Former president Joe Biden once suggested that misinformation is “killing people.”

The fact is, though, that misinformation doesn’t actually change minds all that much.

As far back as the 2016 election, exposure to online content didn’t appear to affect elections as much as initially thought. A 2017 paper by Stanford economists Levi Boxell and Matthew Gentzkow and Brown economist Jesse Shapiro found that political polarization has been most intense among the oldest Americans, who spend the least time online. The research suggested that cable news was a more significant driver of partisan divisions. A 2018 paper from the same authors found that Trump performed worse than previous Republican candidates among internet users and people who got campaign news online and concluded that “the internet was not a source of advantage to Trump.”

Traces of Ancient Brine Discovered on the Asteroid Bennu Contain Minerals Crucial to Life | Smithsonian

Left by Evaporated Water, the Salty Residue Contains Compounds Never Observed Before in Samples From Asteroids

ChatGPT gets confused easily in Advanced Voice Mode | Understanding AI

The realtime capabilities of GPT-4o are a marvel in many ways. Conversations flow more smoothly and the video feature makes it unnecessary to manually take and upload photos.

But as we’ll see, the model has a limited ability to understand the world it sees on camera. GPT-4o cannot monitor a live video and speak up when it notices a particular object or situation. It’s easy to get the chatbot to believe things that aren’t true—even things that are flatly contradicted by the image on camera. And GPT-4o’s capacity for spatial reasoning is extremely limited.

I don’t necessarily see these mistakes as signs of deep flaws in the architecture of GPT-4o. Rather, Advanced Voice Mode feels like an early version of a product with a lot of room for improvement. I suspect that better training data will improve the model’s performance on many of these tasks. And I think it could be fairly easy to create synthetic training data for some of those tasks.

But right now, ChatGPT’s Advanced Voice Mode simply doesn’t understand its surroundings in the sophisticated way you might have assumed from viewing OpenAI’s impressive demos.

1 star

Management Mantras | Stay SaaSy

These are actually very good…

In the course of getting work done at a company, nobody should be doing anyone else any favors. Everything you do at work should be the most important thing you could be doing to add value to the business, and those things should be aided by people whose job it is to support that prioritized effort.

In the course of doing work, favors are either misunderstood responsibilities, gaps in company process or design, or people working on non-priority things. […]

This can get totally out of control to the point where people ask for raises for work that never had any impact, because they did their part. Good companies don’t allow this to happen - they fire people that can’t seem to get correlated with success.

To be 100% clear: at good companies, if the projects that you touch always seem to fail, you are guilty until proven innocent.

Giant iceberg on crash course with island, putting penguins and seals in danger | BBC News

Around the world a group of scientists, sailors and fishermen are anxiously checking satellite pictures to monitor the daily movements of this queen of icebergs.

It is known as A23a and is one of the world's oldest.

It calved, or broke off, from the Filchner Ice Shelf in Antarctica in 1986 but got stuck on the seafloor and then trapped in an ocean vortex.

Finally, in December, it broke free and is now on its final journey, speeding into oblivion.

The warmer waters north of Antarctica are melting and weakening its vast sides that extend up to 1,312ft (400m), taller than the Shard in London.

The Impact of 25% Tariffs on Canadian GDP | The Lens

The world has awakened to the power of Deepseek, the rival to OpenAI’s Model o1 that has tech stocks reeling. I played around with Deepseek for a couple of hours yesterday, and I talked with a number of friends who are already using it to do some pretty advanced coding and problem solving.

Early this morning, Apollo’s Torsten Slok shared a new report from the Bank of Canada. He highlighted a simulation the Bank ran to assess the potential impact on Canada if the US imposes a 25% tariff on all exports into the United States. The results show that Canadian GDP would decline by a whopping 6%.

I wondered whether Deepseek would come up with a similar estimate. So I asked it.

Politics is Cruelty | Bet On It

Without McCloud, you might sense a contradiction between these promises of joy and threats of anger. But even as a matter of basic anatomy, human beings are entirely capable of feeling joy and anger all at once. And to repeat, we have a name for this emotional fusion: Cruelty.

Cruelty is the main emotion that politicians pander to. And cruelty is what every politician strives to deliver. They don’t want to make everyone happy. They want to make their friends happy by making their enemies suffer. Which requires them to not only identify enemies, but create an endless queue of enemies lest they run out.

Peace and Free Trade | Marginal Revolution

The effect of free trade on war was perhaps most pithily summarized by the aphorism “when goods don’t cross borders, soldiers will.” In Territory flows and trade flows between 1870 and 2008 Hu, Li and Zhang offer supporting evidence.

The Japanese Salaryman Eraser Goes Bald Through Use | Kottke

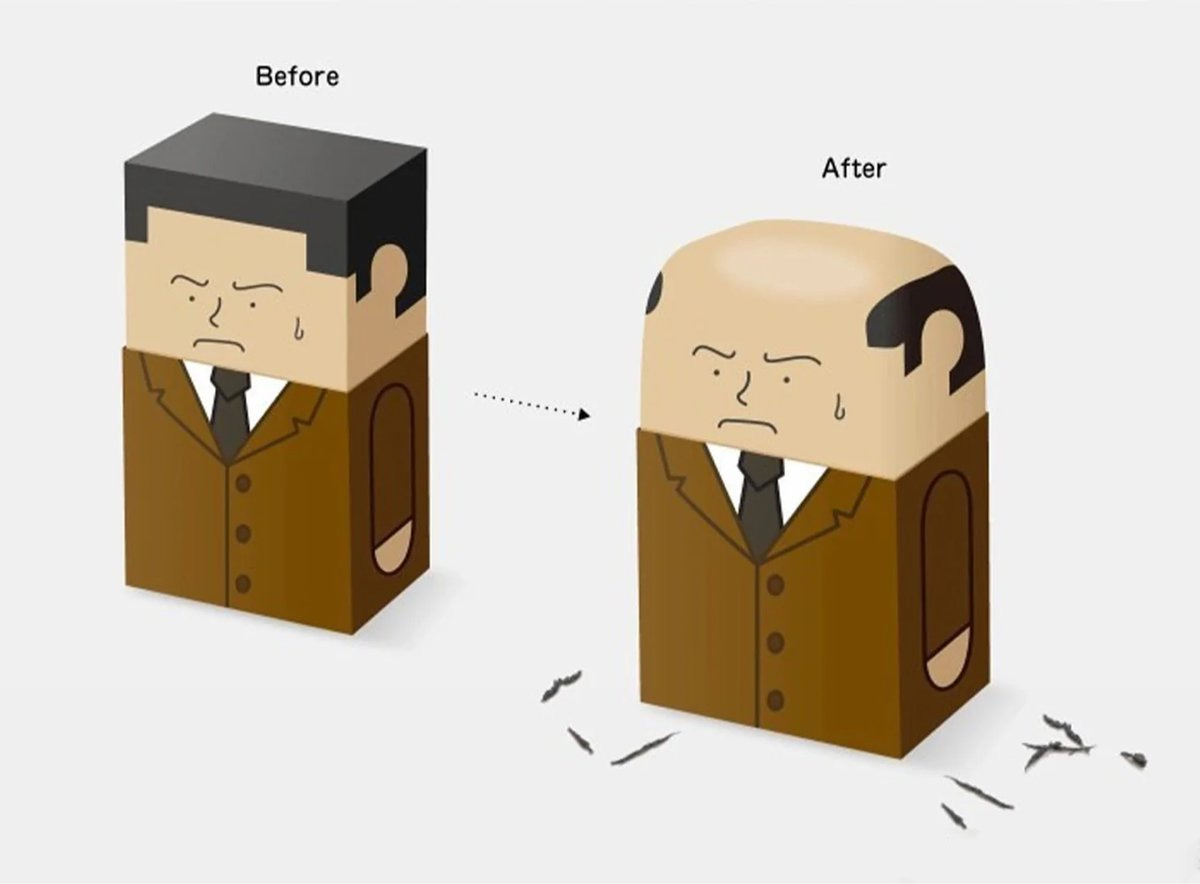

Designed by Kazuya Ishikawa, the clever Salaryman Eraser features a Japanese businessman who goes bald as you use the eraser.